Introduction

In this post, we will use a cool project from Google, MediaPipe, to build a code snippet that can detect whether a person is drowsy. This could be used to monitor people while they drive or perform dangerous tasks that require them to be fully awake.

MediaPipe offers cross-platform, customizable ML solutions for live and streaming media. Learn more about the MediaPipe project here.

The idea is simple: we will monitor the person's eyes, and if they stay closed for some time, we will show an alert. To build the solution, we need the following:

- Camera. We need a camera to monitor the person's eyes in real time and identify whether he/she is falling asleep. For this exercise, we will use the webcam of a PC or laptop. The OpenCV library already has all the tools to capture each frame from a video stream in real time.

- Capture eye metadata. Fortunately, the Google MediaPipe library has a Python wrapper (it was originally accessible only from C++) that provides facial landmarks, which we can use to capture the contour around the eyes.

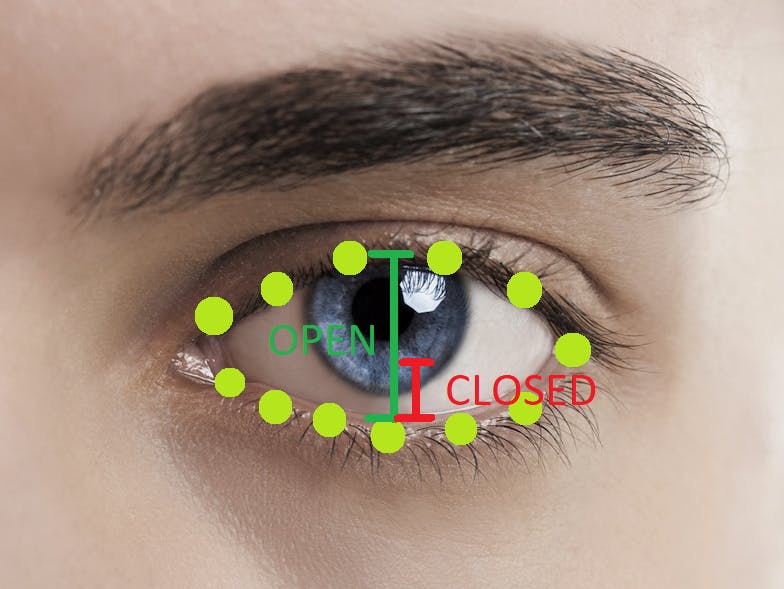

- Determine whether the eyes are open or closed. MediaPipe returns the normalized position of each landmark on the face (as seen in the image above), which we convert into an array of [x, y] pixel positions. We need to filter them to keep only the points around the right and left eyes. Once these arrays are available, we estimate the height of each eye. Our condition: if both eyes stay half-closed for more than k consecutive frames, we raise an alert. We will use k = 20 in our example.

OK, now that we know how to solve this, let's start with the fun part: building the prototype.

Load the Libraries

MediaPipe is available on pip, so run `pip install mediapipe` to install the library. The code also needs OpenCV and NumPy (`pip install opencv-python numpy`).

```python
import cv2 as cv
import numpy as np
import mediapipe as mp
```

Eye Height Function open_len(arr)

MediaPipe gives us the points around both eyes as a collection of [x, y] points. The idea is to find the highest and lowest y-values of each eye, so that we can use them to measure how open it is. The following function takes the array of one eye and calculates its height as the difference between the maximum and minimum y-values of the [x, y] point collection.

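```python
def open_len(arr):
    # collect the y-coordinate of every [x, y] point of the eye contour
    y_arr = [y for _, y in arr]
    # eye height: distance between the lowest and the highest point
    return max(y_arr) - min(y_arr)
```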
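To make the height measure concrete, here is a worked example on a made-up six-point contour (the coordinates are invented for illustration). The snippet repeats `open_len` so it runs on its own, and adds `open_len_np`, a hypothetical vectorized variant that is not part of the original post: for an (N, 2) NumPy array, the height is simply the peak-to-peak range of the y column.

```python
import numpy as np


def open_len(arr):
    # same logic as the post: max y minus min y of the contour points
    y_arr = [y for _, y in arr]
    return max(y_arr) - min(y_arr)


def open_len_np(arr):
    # hypothetical vectorized variant: peak-to-peak range of the y column
    return int(np.ptp(np.asarray(arr)[:, 1]))


# toy eye contour in pixel coordinates (made-up numbers)
eye = np.array([[100, 50], [110, 44], [120, 43], [130, 50], [120, 56], [110, 57]])

print(open_len(eye))     # 57 - 43 = 14
print(open_len_np(eye))  # 14
```

Both versions return the same value; the loop-based one reads closer to the prose description, while the NumPy one avoids building an intermediate Python list.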

Functional Prototype

```python
mp_face_mesh = mp.solutions.face_mesh

# A: indices of the eye landmarks in the Face Mesh landmark collection
RIGHT_EYE = [362, 382, 381, 380, 374, 373, 390, 249, 263, 466, 388, 387, 386, 385, 384, 398]
LEFT_EYE = [33, 7, 163, 144, 145, 153, 154, 155, 133, 173, 157, 158, 159, 160, 161, 246]

# handle of the webcam
cap = cv.VideoCapture(0)

# MediaPipe parameters
with mp_face_mesh.FaceMesh(
    max_num_faces=1,
    refine_landmarks=True,
    min_detection_confidence=0.5,
    min_tracking_confidence=0.5,
) as face_mesh:

    # B: count how many consecutive frames the user seems to be dozing off (half-closed eyes)
    drowsy_frames = 0

    # C: max height of each eye
    max_left = 0
    max_right = 0

    while True:
        # get every frame from the webcam
        ret, frame = cap.read()
        if not ret:
            break

        # mirror the frame, convert it to RGB (what MediaPipe expects) and get its size
        frame = cv.flip(frame, 1)
        rgb_frame = cv.cvtColor(frame, cv.COLOR_BGR2RGB)
        img_h, img_w = frame.shape[:2]

        # D: collect the MediaPipe results
        results = face_mesh.process(rgb_frame)

        # E: if MediaPipe found any landmarks in the frame...
        if results.multi_face_landmarks:
            # F: [x, y] pixel coordinates of all facial landmarks
            # (int32, because OpenCV's drawing functions expect 32-bit integer points)
            all_landmarks = np.array([
                np.multiply([p.x, p.y], [img_w, img_h]).astype(np.int32)
                for p in results.multi_face_landmarks[0].landmark
            ])

            # G: right and left eye landmarks
            right_eye = all_landmarks[RIGHT_EYE]
            left_eye = all_landmarks[LEFT_EYE]

            # H: draw only the eye landmarks over the image
            cv.polylines(frame, [left_eye], True, (0, 255, 0), 1, cv.LINE_AA)
            cv.polylines(frame, [right_eye], True, (0, 255, 0), 1, cv.LINE_AA)

            # I: estimate the height of each eye (each length comes from its own eye)
            len_left = open_len(left_eye)
            len_right = open_len(right_eye)

            # J: keep the highest height seen for each eye
            if len_left > max_left:
                max_left = len_left
            if len_right > max_right:
                max_right = len_right

            # print the height of each eye on screen
            cv.putText(img=frame, text='Max: ' + str(max_left) + ' Left Eye: ' + str(len_left),
                       fontFace=0, org=(10, 30), fontScale=0.5, color=(0, 255, 0))
            cv.putText(img=frame, text='Max: ' + str(max_right) + ' Right Eye: ' + str(len_right),
                       fontFace=0, org=(10, 50), fontScale=0.5, color=(0, 255, 0))

            # K: if both eyes are at most half open, count the frame; otherwise reset
            if len_left <= int(max_left / 2) + 1 and len_right <= int(max_right / 2) + 1:
                drowsy_frames += 1
            else:
                drowsy_frames = 0

            # L: above k (= 20) frames, the person has had drowsy eyes for more than k frames
            if drowsy_frames > 20:
                cv.putText(img=frame, text='ALERT', fontFace=0, org=(200, 300),
                           fontScale=3, color=(0, 255, 0), thickness=3)

        cv.imshow('img', frame)
        key = cv.waitKey(1)
        if key == ord('q'):
            break

cap.release()
cv.destroyAllWindows()
```

Code Sections Explained

A: RIGHT_EYE and LEFT_EYE hold the indices of the points around each eye in the Face Mesh landmark collection. We use these indices to filter the MediaPipe results.

B: drowsy_frames counts the consecutive frames in which both of the user's eyes are at most about half as open as their maximum recorded height.

C: max_left and max_right record the maximum height observed for each eye, which serves as the "fully open" reference.

D: This is where MediaPipe takes rgb_frame and searches for landmarks. face_mesh.process() is basically the predict method of MediaPipe's FaceMesh solution. It expects an RGB image, which is why the BGR frame from OpenCV is converted first.
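OpenCV delivers frames with their channels in BGR order, while FaceMesh.process() expects an RGB image. For a 3-channel image, the cv.cvtColor(frame, cv.COLOR_BGR2RGB) call amounts to reversing the channel axis, as this NumPy-only sketch shows on a made-up 1x2 image. It is only an illustration of what the conversion does; the prototype keeps using cv.cvtColor.

```python
import numpy as np

# 1x2 image in OpenCV's BGR order: a pure-blue pixel, then a pure-red pixel
bgr = np.array([[[255, 0, 0], [0, 0, 255]]], dtype=np.uint8)

# reverse the last (channel) axis: BGR -> RGB;
# np.ascontiguousarray turns the reversed view into a regular contiguous copy
rgb = np.ascontiguousarray(bgr[..., ::-1])

print(rgb[0, 0])  # still blue, now stored as (R, G, B) = (0, 0, 255)
print(rgb[0, 1])  # still red, now stored as (255, 0, 0)
```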

E: results.multi_face_landmarks contains the landmarks of every face found, or None if no face was detected. If a face was found, we process it; otherwise, we skip the frame.

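F: all_landmarks contains all the face landmarks MediaPipe found in the image (478 points with refine_landmarks=True), converted from normalized coordinates to pixels by multiplying them by the image width and height. This covers the whole face area, not only the eyes.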
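MediaPipe reports each landmark with x and y normalized to [0, 1] relative to the image width and height, so section F scales them back to pixels. The sketch below runs that expression on hypothetical stand-in landmarks built with SimpleNamespace (real MediaPipe landmarks also carry z, ignored here), so it needs neither a camera nor MediaPipe. The 640x480 frame size is an assumption, and np.int32 is used because OpenCV's drawing functions work with 32-bit integer points.

```python
from types import SimpleNamespace

import numpy as np

# hypothetical stand-ins for MediaPipe landmarks (normalized x, y in [0, 1])
landmarks = [SimpleNamespace(x=0.5, y=0.25), SimpleNamespace(x=0.125, y=0.75)]

img_w, img_h = 640, 480  # assumed frame size in pixels

# scale (x, y) by (width, height), then truncate to integer pixel positions
all_landmarks = np.array(
    [np.multiply([p.x, p.y], [img_w, img_h]).astype(np.int32) for p in landmarks]
)
print(all_landmarks)  # [[320 120]
                      #  [ 80 360]]
```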

G: We filter the all_landmarks array with the indices in RIGHT_EYE and LEFT_EYE from section A. This returns two arrays of the form [[x,y],[x,y],...,[x,y]] that contain only the points around the right and left eyes.
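Section G relies on NumPy integer-array ("fancy") indexing: indexing an (N, 2) array with a list of row numbers returns just those rows, in the order listed. The toy table and the EYE index list below are made up for illustration. The order matters because cv.polylines connects the points in the sequence given, which is why the lists in section A are written in contour order rather than sorted.

```python
import numpy as np

# toy landmark table: row i holds the [x, y] pixel position of landmark i
all_landmarks = np.array([[0, 0], [10, 1], [20, 2], [30, 3], [40, 4], [50, 5]])

# hypothetical index list playing the role of LEFT_EYE / RIGHT_EYE
EYE = [4, 1, 3]

# picks rows 4, 1 and 3, in that order, as a new (3, 2) array
eye = all_landmarks[EYE]
print(eye)
```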

H: This is for debugging. We use OpenCV's polylines function to draw the outline of each eye from the right_eye and left_eye landmarks captured in section G.

I: Estimate the height of each eye.

J: keep track of the max height of each eye.

K: This is the drowsy condition. If both eyes are at most half open (half of their maximum height, plus one pixel of tolerance), add 1 to the drowsy_frames counter. Otherwise, reset the counter to 0 to start counting again.

L: If the user has had drowsy eyes for more than 20 consecutive frames, show the ALERT text on the screen.
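The decision rule from sections J, K and L can be pulled out of the webcam loop so it can be checked without a camera. DrowsinessDetector below is a hypothetical helper, not part of the original post; it keeps the same update order as the prototype (update the maxima, apply the half-open test, then compare the streak with k), with k = 20 by default. The eye heights in the usage example are made up.

```python
class DrowsinessDetector:
    """Hypothetical helper: sections J, K and L of the prototype, minus the camera."""

    def __init__(self, k=20):
        self.k = k              # alert after MORE than k consecutive drowsy frames
        self.max_left = 0       # largest left-eye height seen so far (sections C/J)
        self.max_right = 0      # largest right-eye height seen so far
        self.drowsy_frames = 0  # current streak of half-closed frames (sections B/K)

    def update(self, len_left, len_right):
        """Feed one frame's eye heights in pixels; return True if ALERT should show."""
        # J: keep the highest height observed for each eye
        self.max_left = max(self.max_left, len_left)
        self.max_right = max(self.max_right, len_right)

        # K: both eyes at most half their max height, with a 1-pixel tolerance
        if (len_left <= int(self.max_left / 2) + 1
                and len_right <= int(self.max_right / 2) + 1):
            self.drowsy_frames += 1
        else:
            self.drowsy_frames = 0

        # L: alert once the streak is longer than k frames
        return self.drowsy_frames > self.k


# usage with made-up heights: 5 frames of open eyes (12 px), then 25 frames at 5 px
detector = DrowsinessDetector(k=20)
heights = [(12, 12)] * 5 + [(5, 5)] * 25
alerts = [detector.update(left, right) for left, right in heights]
print(alerts.index(True))  # 25, i.e. the 21st consecutive half-closed frame
```

Keeping the rule separate from the capture loop makes it easy to try other values of k or other thresholds on recorded eye heights before running the webcam version.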

This is the way... of mediapipe

Get the Full Code

You can get the Gist here!