Boostraping

Bootstrapping is a resampling technique with replacement; that is, we can choose on every sample a subset of elements that might be repeated.

The bootstrap method is a statistical technique for estimating quantities of a population by averaging estimates from multiple small data samples (Brownlee, 2018).

The Algorithm To Create One Sample

N = size of Dataset (population)

n = target size of sample

cn = current size of the sample

s = sample

While (cn < n)

Randomly select an item i from the dataset D

Add the randomly selected item i to s.

cn++

This creates subset s full of randomly selected samples from D. This process can be repeated k times.

Boostraping with Scikit-Learn

In Scikit-Learn, we can get a random sample of a dataset D with the resample method. Let's select from a 4-row matrix 3 samples of two rows each.

import numpy as np

from sklearn.utils import resample

X = np.array([[1., 0.], [2., 1.], [0., 0.], [2,4]])

array([[1., 0.], [2., 1.], [0., 0.], [2., 4.]])

resample(X, n_samples=2)

array([[1., 0.], [1., 0.]])

resample(X, n_samples=2)

array([[0., 0.], [2., 4.]])

resample(X, n_samples=2)

array([[2., 4.], [0., 0.]])

Cross-Validation

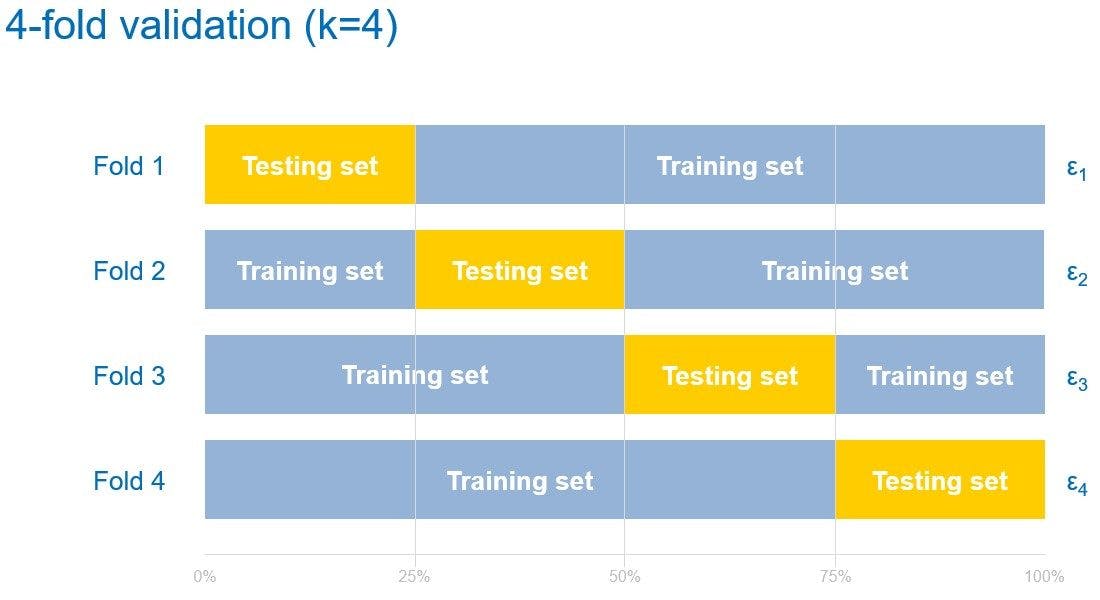

Cross-validations is a very similar technique to Bootstrapping, with the difference that it selects its samples without replacement; that is, there are no repeated elements in every subset. Selecting k-samples with Cross-Validation is called K-Fold CrossValidation. Usually, on each cross-validation exercise, we define a portion of the selected sample to be the train and the other the test set (for example 70/30 or 80/20). The type of cross-validation that selects a test set with one single example is called LOOCV (leave one out cross-validation).

Subsetting a dataset using LOOCV is computationally expensive, so with usually use k-fold cross-validation with a k = {4, 5, 7, 10}

In k-fold cross-validation, the original sample is randomly partitioned into k equal sized subsamples. Of the k subsamples, a single subsample is retained as the validation data for testing the model, and the remaining k − 1 subsamples are used as training data. The cross-validation process is then repeated k times, with each of the k subsamples used exactly once as the validation data. The k results can then be averaged to produce a single estimation.

Cross-Validation with Scikit-Learn

import numpy as np

from sklearn.model_selection import KFold

X = np.array([[1, 2], [3, 4], [1, 2], [3, 4]])

y = np.array([1, 2, 3, 4])

kf = KFold(n_splits=2)

kf.get_n_splits(X)

for train_index, test_index in kf.split(X):

print("TRAIN:", train_index, "TEST:", test_index)

X_train, X_test = X[train_index], X[test_index]

y_train, y_test = y[train_index], y[test_index]

KFold(n_splits=2, random_state=None, shuffle=False) TRAIN: [2 3] TEST: [0 1] TRAIN: [0 1] TEST: [2 3]

So Bootstrapping or Cross-Validation

- Bootstrapping selects samples with replacements that can be as big as the dataset.

- Cross-validation samples are smaller than the dataset.

- Bootstrapping contains repeated elements in every subset. Bootstrapping relies on random sampling.

- Cross-validation does not rely on random sampling, just splitting the dataset into k unique subsets.

- Cross-validation is usually used to test an ML model's generalization capabilities.

- Bootstrapping is used more for statistical tests, ensemble machine learning, and parameter estimation.

If you are still not sure which one to use for testing your ML model, just go with cross-validation.

Example of Cross-validation with a ML Model

from sklearn import datasets, linear_model

from sklearn.model_selection import cross_val_score

diabetes = datasets.load_diabetes()

X = diabetes.data[:150]

y = diabetes.target[:150]

lasso = linear_model.Lasso()

print(cross_val_score(lasso, X, y, cv=3))

[0.33150734 0.08022311 0.03531764]

In this example, we are applying Lasso to predict diabetes progression after one year. With k = 3, we obtained accuracy scores of 33%, 8%, and 35%. If we average them we can say that the cross-validation accuracy score is mean(33%, 8%, and 35%) = 25.3%.

Now, you can test this same code with another ML model such as Linear Regression OLS. If the new model has a better cross-validation accuracy score, then you can be certain that the new model performs better than the other one. Cross-validation is excellent for model selection.